Shulkhan: a touch interface for the printed Talmud

made by Joseph Tepperman

for the Powered by Sefaria contest (Honorable Mention)

August 2020

An explanation

All through my daily Gemara learning, I find myself turning to Sefaria again and again. When I get stuck, I look up translations in the Steinsaltz edition or check individual word definitions in Jastrow. And when I want to dig deep, I save interesting passages in Sefaria's Sheets, or look for commentary and parallel texts.

When it comes to Jewish texts, there's only one thing Sefaria doesn't have: the printed books themselves. My love for physical paper books is a large part of why I started learning Gemara every day, and there's just something undeniable about the iconic layout of the Vilna edition:

I know of no other book more beautiful, intricate, or baffling. Rashi and Tosafos argue across columns, and all the smaller commentaries peek in from the margins, arranged like some kind of strange map. Even the typesetting holds a type of lesser sanctity: there are stories of tzadikim who could stick a pin through one word, then through multiple pages, and predict the exact word the pin would hit when it came out the other side. If nothing else, the layout provides an excellent mnemonic device, organizing abstract concepts into concrete, 2D space.

So, to combine a reverence for the Vilna Shas with a general need for English translation, my idea was this: to access Sefaria directly from the Vilna Shas page itself. What if, by pointing to a word or a line, I could highlight the section I was interested in, then project its translation directly onto the table in front of me, without ever having to open my computer or break out my phone? While Sefaria connects me to other texts and to a community of other learners, learning Gemara is so demanding that I find my focus is best when I stick to the printed page. Someone once said the Talmud is the original hypertext. If so, it's ideally suited not just for modern technological adaptations, but for embodying the technology itself.

This is all by way of explaining how I found myself, during 2020's COVID-19 pandemic, tying a down-facing projector to a shelf above my desk, clamping a webcam to the side of that desk, then spending a couple of months programming something I've decided to call Shulkhan.

As much as I wish I came up with the idea of projecting onto books and using the pages themselves as primitive touchscreens, it turns out the concept of Augmented Paper dates back to the 1990s, if not earlier. I've seen research papers suggesting it could be used for mundane things - like annotating other research papers. I've also seen impressive videos of museum installations and "smart tables" and holographic children's books. As far as I know, Shulkhan is the first Torah-centered application for this technology, but hopefully not the last.

Who is the intended audience for Shulkhan? Learners like me, I guess. Anyone with an attachment to the printed page of the Vilna Shas, plus a need for more than what's on the page, and a fatigue with smartphones and tablets. Of course, if you prefer the paper Gemara there are many excellent printed translations. I suppose there's something aspirational in the Shulkhan project: the idea that translation can become less of a crutch and more like training wheels. On the other hand, every Rabbi I've ever met needs to look up a strange word or an obscure turn of phrase from time to time. And translation is only the first, and most obvious, version of what Shulkhan can do.

How Shulkhan works

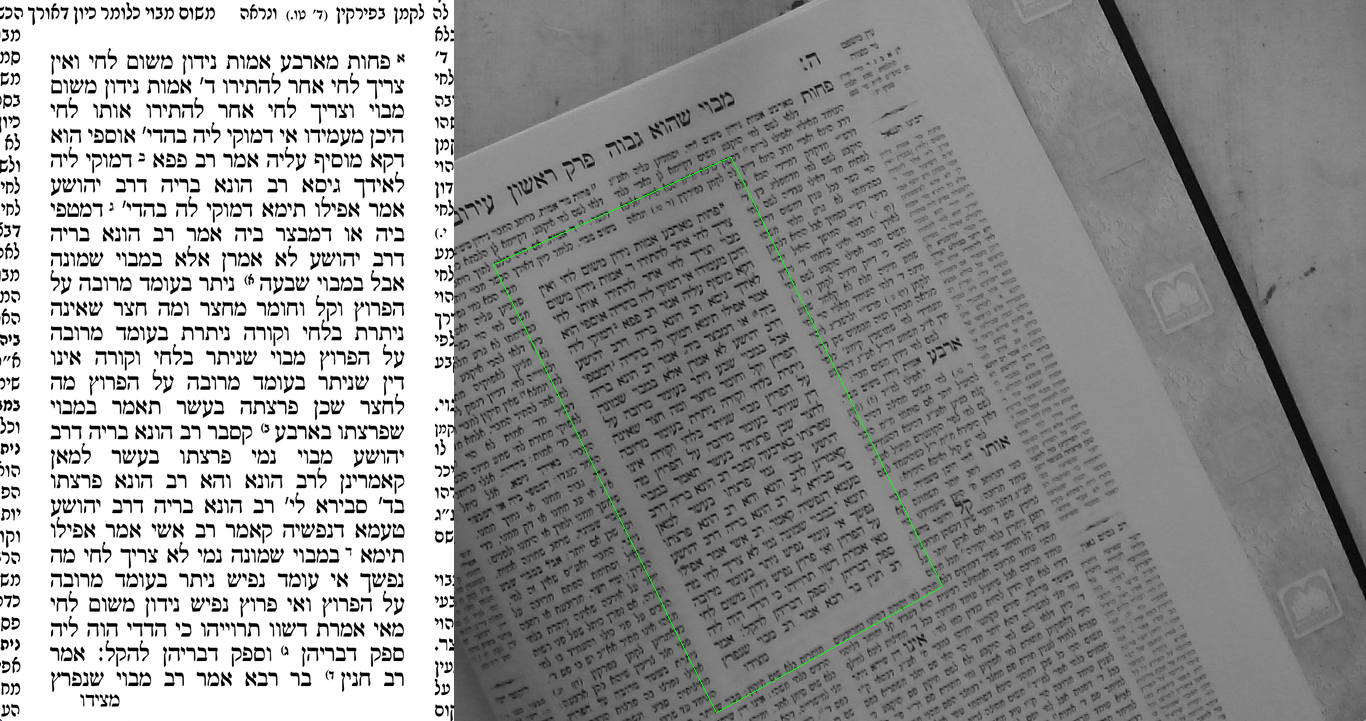

The camera captures realtime video footage of the printed page lying open on the table. In each frame of video we detect the main block of Gemara (i.e. not Rashi or Tosafos) by comparing the whole frame (right) to a smaller template image (left). The green rectangle shows the area that matches the template.

Recall that for a given page, the layout of the Vilna Shas will be roughly identical from one edition to the next. Offline, the template image has already been segmented into words and text lines using open-source OCR software, and these words and lines of the template have already been connected to Sefaria's database of translations through their Search API. In other words, we already know a spatial mapping from the template image to its English translation. Once the template is detected in a frame of live video, we can connect the physical book to these translations, too.

Using a standard computer vision algorithm called RANSAC, we estimate a 2D warp (a "homography") between this template and the live video, so that every pixel in the template corresponds to a pixel in the video, even when the book in the video is at an angle or slightly distorted.

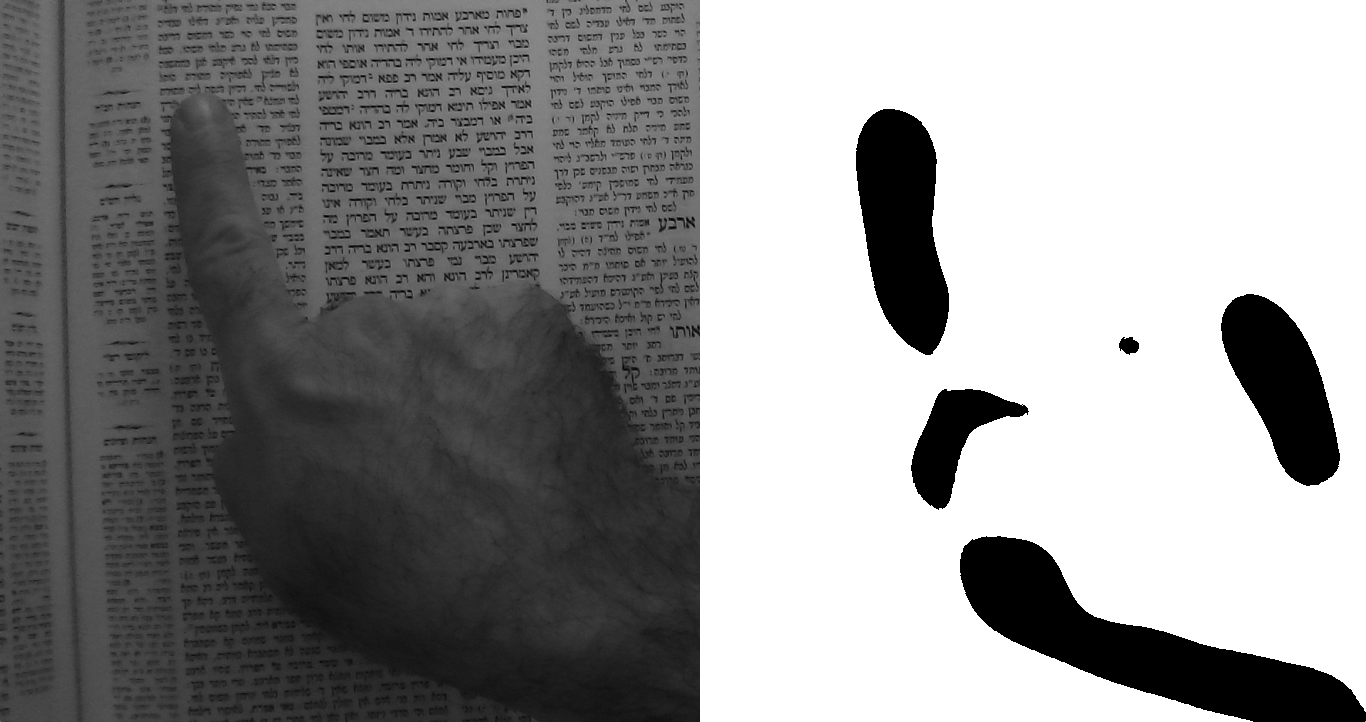

Simple gesture recognition detects the presence of the user's finger on the page. We can compare the current video frame to a reference/background frame by converting both frames from grayscale to black and white, subtracting the reference, then blurring the result to remove any noise and obtain a few generalized blobs that represent the user's hand:

In the blurred result, we assume the topmost black pixel is the location of the word being pointed to. The projector's highlighting is in blue not only because it's easy on the eyes but because blue can be filtered out of the RGB camera image components and won't be mistaken for a hand gesture.

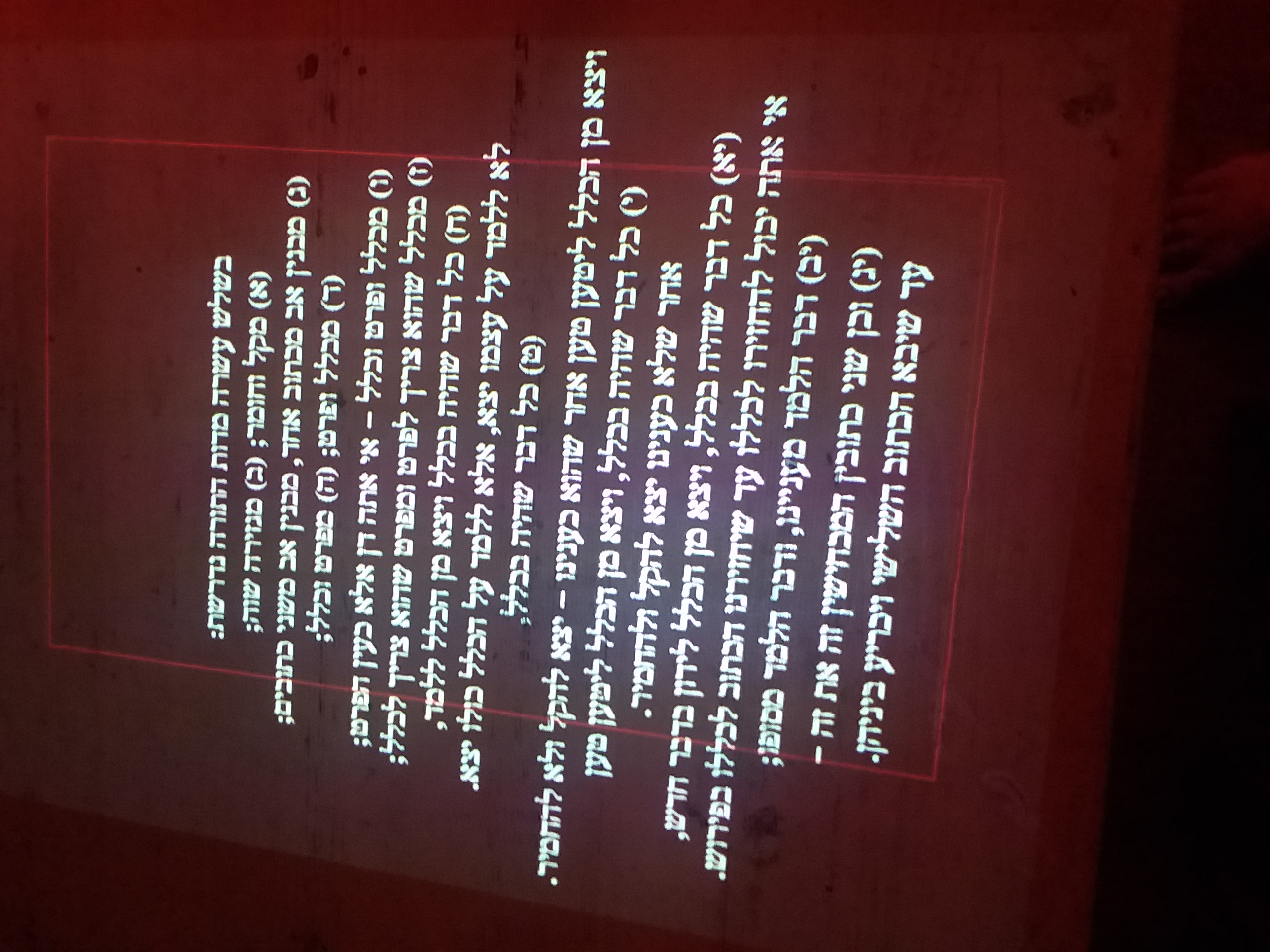

Of course, before we can project any highlighting onto a book, the projector and camera have to be calibrated with respect to one another, to compensate for any rotation or scaling differences between them. To do this, we can use the same template-matching algorithm mentioned above, but this time leading to a different 2D warp - one that maps the camera eye to the plane of the projection. The projector shows an image of known dimensions, then the camera captures whatever it sees, detects the known image, and gives its orientation from the camera's point of view. This way we can estimate the relative offset between the two, and the projector can "know" where the camera eye begins and ends, and compensate accordingly.

For the calibration template image I chose the famous opening of Sifra, although I could have chosen anything, text or otherwise, as long as it's distinct enough to be detected automatically:

Here the red box represents the camera's eye, large enough to fit the page of Gemara, while the projection extends beyond it.

One other very important component is calibrating the camera itself, to remove the slight fisheye distortion most cheap lenses suffer from. There are numerous tutorials online explaining how this is done, and it proved to be indispensible for this project. The fisheye effect can make certain text lines appear slightly curved when they are actually straight, and any highlighting derived from these false curves will look "off" when projected onto the real, uncurved book. This animation illustrates how much the camera image can change with and without calibration:

Hardware

- the webcam is a standard off-brand 1080p HD thing with manual focus and automatic exposure compensation. It costs about $50 and connects through USB

- the camera mount is also standard and costs about $15

- the projector, a ViewSonic Pro8200, I bought about 10 years ago for a couple hundred dollars - it is designed for home cinema, has 1080p resolution and HDMI inputs, but it's far from high-end

- the table is just a table I found on the street - someone was getting rid of it

- this all runs on my MacBook Air laptop from 2012, no fancy graphics processor required

Software

- Shulkhan is programmed in C++

- I wrapped it in the Cinder library because of Cinder's fast and convenient methods for image processing

- Tesseract is my open-source OCR software of choice (including their pre-trained Hebrew text model)

- some basic computer vision algorithms are provided by the openCV library

- as mentioned above, I use the Sefaria Search API v2 to get the translations

- template images of the Gemara provided by hebrewbooks.org

Current Limitations

- requires the user to specify which page of Gemara it is (future work: automatic daf retrieval)

- doesn't handle Rashi/Tosafos, but Sefaria doesn't generally have translations for them anyway

- needs to be evenly lit, not too bright or too dark

- sensitive to large 3D distortions, e.g. excessive page curvature